I am a senior researcher at Microsoft Research AI4Science where I work on machine learning methods for chemistry with Frank Noé.

I want to understand how machine learning can help us to solve critical problems in the sciences and to build new, sustainable technology.

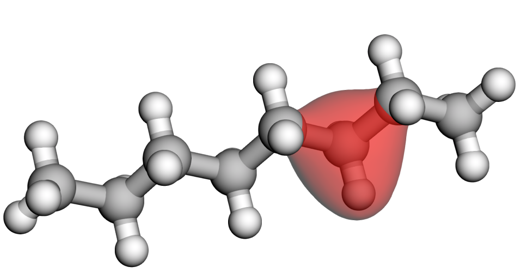

At Microsoft, I spent two years working on electronic structure: developing an ab initio foundation model for wavefunctions using deep Quantum Monte Carlo.

This is a new, unsupervised approach to high-accuracy quantum chemistry that can tackle multireferential problems like bond breaking.

We also worked on extracting electron densities from our QMC wavefunctions.

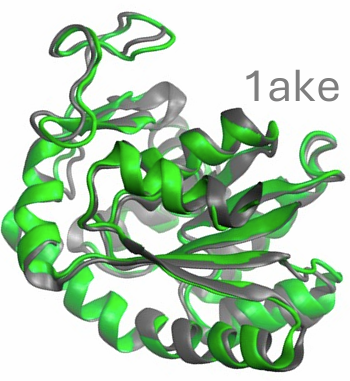

I now work on modelling protein dynamics.

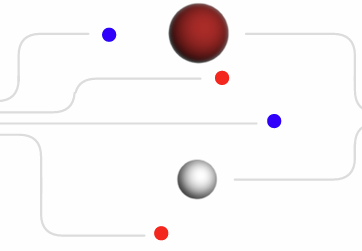

I also have a strong interest in Bayesian experimental design and active learning. This was the main topic of my PhD in Statistical Machine Learning at the University of Oxford, supervised by Yee Whye Teh and Tom Rainforth in the Computational Stats and Machine Learning Group in the Department of Statistics. I was awarded the prestigious Corcoran Memorial Prize for my PhD thesis. Before starting my PhD, I studied mathematics at Cambridge where my Director of Studies was Julia Gog.

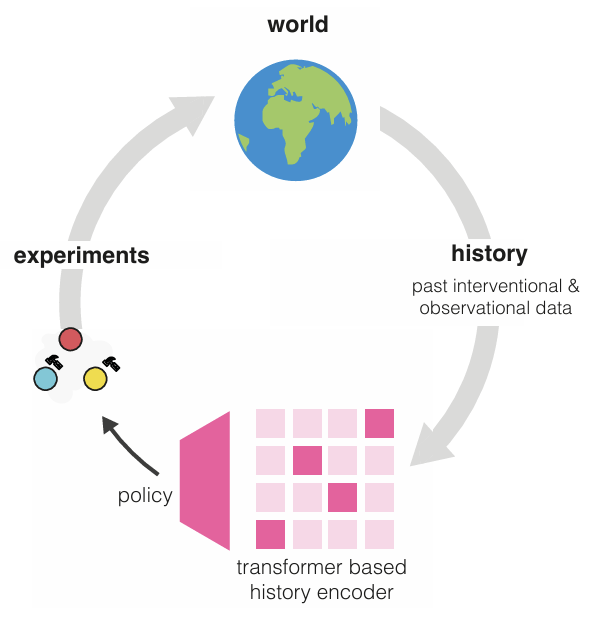

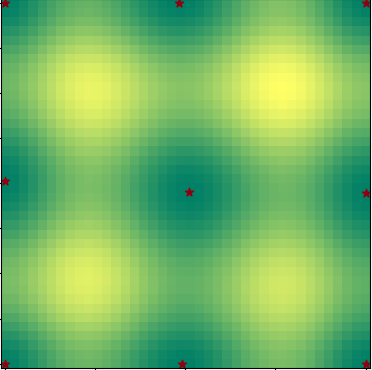

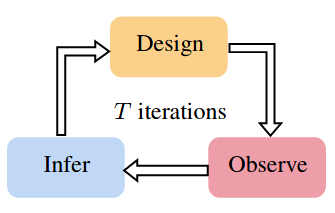

A large part of my PhD work was on Bayesian experimental design: how do we design experiments that will be most informative about the process being investigated? One approach is to optimize the Expected Information Gain (EIG), which can be seen as a mutual information, over the space of possible designs. In my work, I have developed variational methods to estimate the EIG, stochastic gradient methods to optimize over designs, and unbiased gradient estimators of the EIG. In more recent work, we have studied policies that can choose a sequence of designs automatically. These two talks (Corcoran Memorial Prize Lecture and SIAM minisymposium) offer introductions to experimental design and my research in this area.
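To make the EIG concrete, here is a minimal sketch of a nested Monte Carlo estimator on a toy problem. Everything in it is an illustrative assumption rather than code from my papers: the model is linear-Gaussian (prior θ ~ N(0, 1), outcome y | θ, d ~ N(dθ, σ²)), the function names `nmc_eig` and `exact_eig` are hypothetical, and nested Monte Carlo is a simple baseline, not one of the variational estimators mentioned above. The toy model is chosen because its EIG has the closed form ½ log(1 + d²/σ²), which gives something to check against.

```python
import numpy as np


def log_normal_pdf(y, mean, sigma):
    """Log density of N(mean, sigma^2) evaluated at y (broadcasts)."""
    return -0.5 * np.log(2 * np.pi * sigma**2) - 0.5 * ((y - mean) / sigma) ** 2


def nmc_eig(design, sigma=1.0, n_outer=2000, n_inner=2000, seed=0):
    """Nested Monte Carlo estimate of the EIG for the toy model
    theta ~ N(0, 1), y | theta, d ~ N(d * theta, sigma^2).

    EIG(d) = E[ log p(y | theta, d) - log p(y | d) ], where the marginal
    p(y | d) is approximated with fresh prior samples.
    """
    rng = np.random.default_rng(seed)

    # Outer samples: draw (theta, y) jointly from the model.
    theta = rng.standard_normal(n_outer)
    y = design * theta + sigma * rng.standard_normal(n_outer)
    log_lik = log_normal_pdf(y, design * theta, sigma)

    # Inner samples: independent prior draws used to estimate p(y | d).
    theta_inner = rng.standard_normal(n_inner)
    log_lik_inner = log_normal_pdf(
        y[:, None], design * theta_inner[None, :], sigma
    )  # shape (n_outer, n_inner)

    # Numerically stable log-mean-exp over the inner samples.
    row_max = log_lik_inner.max(axis=1, keepdims=True)
    log_marginal = row_max[:, 0] + np.log(
        np.exp(log_lik_inner - row_max).mean(axis=1)
    )

    return float(np.mean(log_lik - log_marginal))


def exact_eig(design, sigma=1.0):
    """Closed-form EIG for the linear-Gaussian toy model."""
    return 0.5 * np.log1p(design**2 / sigma**2)


if __name__ == "__main__":
    for d in (0.5, 1.0, 2.0):
        print(f"d={d}: NMC={nmc_eig(d):.3f}, exact={exact_eig(d):.3f}")
```

In this toy model a larger |d| always yields a more informative experiment, so the design problem is trivial. The sketch only illustrates what is being estimated: the nested estimator needs an inner average for every outer sample, which is the cost that motivates cheaper estimators and gradient-based optimization over designs.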

I am also keen on open-source code: highlights include OneQMC, experimental design tools in the deep probabilistic programming language Pyro, forward Laplacians, Redis<->Python interfacing, and reproducing SimCLR.

Recent work

Scalable emulation of protein equilibrium ensembles with generative deep learning

Sarah Lewis, Tim Hempel, José Jiménez-Luna, Michael Gastegger, Yu Xie, Andrew YK Foong, Victor García Satorras, Osama Abdin, Bastiaan S Veeling, Iryna Zaporozhets, Yaoyi Chen, Soojung Yang, Adam E Foster, Arne Schneuing, Jigyasa Nigam, Federico Barbero, Vincent Stimper, Andrew Campbell, Jason Yim, Marten Lienen, Yu Shi, Shuxin Zheng, Hannes Schulz, Usman Munir, Roberto Sordillo, Ryota Tomioka, Cecilia Clementi, Frank Noé. Science.

A machine learning introduction to Orbformer

Adam Foster. Blog post.

An ab initio foundation model of wavefunctions that accurately describes chemical bond breaking

Adam Foster, Zeno Schätzle, P. Bernát Szabó, Lixue Cheng, Jonas Köhler, Gino Cassella, Nicholas Gao, Jiawei Li, Frank Noé, Jan Hermann. Preprint.

Highly Accurate Real-space Electron Densities with Neural Networks

Lixue Cheng, P. Bernát Szabó, Zeno Schätzle, Derk Kooi, Jonas Köhler, Klaas J. H. Giesbertz, Frank Noé, Jan Hermann, Paola Gori-Giorgi, Adam Foster. Journal of Chemical Physics.

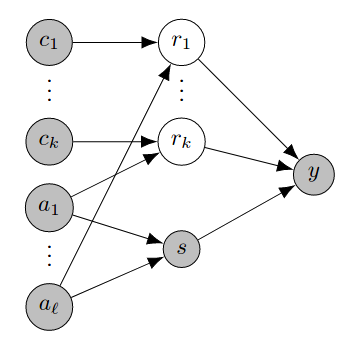

Amortized Active Causal Induction with Deep Reinforcement Learning

Yashas Annadani, Panagiotis Tigas, Stefan Bauer, Adam Foster. NeurIPS 2024.

Making Better Use of Unlabelled Data in Bayesian Active Learning

Freddie Bickford Smith, Adam Foster, Tom Rainforth. AISTATS 2024.

Concepts in Modern Bayesian Experimental Design (Corcoran Memorial Prize)

Stochastic-gradient Bayesian Optimal Experimental Design with Gaussian Processes

Adam Foster. Blog post.

Folx - Forward Laplacian for JAX

Nicholas Gao, Jonas Köhler, Adam Foster.

Prediction-Oriented Bayesian Active Learning

Freddie Bickford Smith, Andreas Kirsch, Sebastian Farquhar, Yarin Gal, Adam Foster, Tom Rainforth. AISTATS 2023.

Modern Bayesian Experimental Design

Tom Rainforth, Adam Foster, Desi R Ivanova, Freddie Bickford Smith. Statistical Science.

CO-BED: Information-Theoretic Contextual Optimization via Bayesian Experimental Design

Desi R Ivanova, Joel Jennings, Tom Rainforth, Cheng Zhang, Adam Foster. ICML 2023.

Differentiable Multi-Target Causal Bayesian Experimental Design

Yashas Annadani, Panagiotis Tigas, Desi R. Ivanova, Andrew Jesson, Yarin Gal, Adam Foster, Stefan Bauer. ICML 2023.

Optimising adaptive experimental designs with RL

Adam Foster. Blog post.

ICML 2022 Long Presentation: Contrastive Mixture of Posteriors

GitHub

Google Scholar

CV